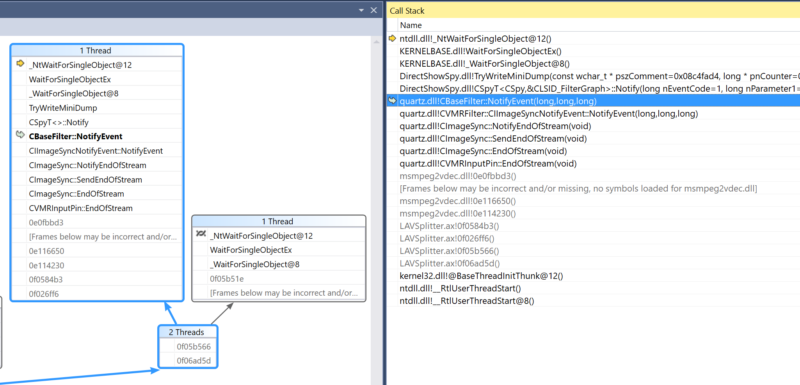

There is very little information about this problem out there (it is not really a bug, rather a miscalculation) because it only comes up with a customized Video Mixing Renderer 7 filter; straightforward use is not affected.

In windowless mode the renderer accepts media samples and displays them as configured. The IVMRWindowlessControl::GetCurrentImage method is available to grab the currently presented image and obtain a copy of what is displayed at the moment: the snapshot. The renderer does the caller a favor and converts the image to RGB, so the method is widely misused as a way to access uncompressed video frames, especially in a format compatible with other APIs or suitable for saving to a bitmap (a related earlier post: How To: Save image to BMP file from IBasicVideo or VMR windowless interface).

One of the problems with the method is that it reads back from video memory, which in some configurations is an extremely expensive operation and is simply unacceptable because of its overall performance impact.

This time, however, I am posting about another issue. By default VMR-7 offers a memory allocator with a single media sample. It accepts a new frame and then blits it to the video device. Simple. With higher resolutions and higher frame rates, and with VMR-7 at the same time being a legacy API that works through compatibility layers, this presentation method becomes a bottleneck. We cannot pre-load the next video frame before the presentation call returns. For 60 frames/second video this means that any congestion lasting about 17 milliseconds (one frame period) may cost us the chance to present the next frame of the stream on time. The result is a visual artifact, and these things are perceptible.
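The 17 millisecond figure is simply one frame period at 60 frames/second. A minimal sketch of that arithmetic in the 100-nanosecond units DirectShow uses for time stamps; `ReferenceTime` and `FrameDuration` are names made up for this illustration, not part of any API:

```cpp
#include <cassert>
#include <cstdint>

// DirectShow expresses time in 100-nanosecond units (REFERENCE_TIME).
// ReferenceTime is a local alias for this sketch only.
using ReferenceTime = std::int64_t;

const ReferenceTime kUnitsPerSecond = 10000000;

// Duration of one frame in 100 ns units for a rational frame rate
// (rateNum / rateDen frames per second), rounded down.
inline ReferenceTime FrameDuration(std::int64_t rateNum, std::int64_t rateDen = 1) {
    assert(rateNum > 0 && rateDen > 0);
    return kUnitsPerSecond * rateDen / rateNum;
}
```

`FrameDuration(60)` evaluates to 166666 units, that is about 16.7 ms: with a single buffer, a stall of roughly that length already eats the whole budget for the next frame.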

An efficient solution to this problem is to increase the number of buffers in the video renderer’s memory allocator and then fill the buffers asynchronously. This works well: we fill the buffers well in advance, and the costly operation no longer has to complete within a single frame period. The upstream pipeline pre-loads video buffers efficiently, and the video renderer simply takes a prepared frame out of the queue and presents it. Terrific!
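To see why extra buffers help, here is a deliberately crude toy model, not a description of how VMR-7 schedules anything. It assumes a producer that needs `costs[i]` time units to fill the buffer for frame `i`, a frame `i` that is due at `(i + 1) * period`, and a buffer that becomes free again once the frame it held has been presented. `CountLateFrames` is a hypothetical helper for this sketch:

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Toy model: counts frames that are not ready by their presentation time
// for a given number of allocator buffers.
int CountLateFrames(const std::vector<long long>& costs, long long period,
                    std::size_t buffers) {
    assert(buffers >= 1 && period > 0);
    std::vector<long long> presented(costs.size());
    long long producerFree = 0;  // when the producer finished the previous frame
    int late = 0;
    for (std::size_t i = 0; i < costs.size(); ++i) {
        // The producer needs a free buffer: the one released by frame i - buffers.
        const long long bufferFree = (i >= buffers) ? presented[i - buffers] : 0;
        const long long ready = std::max(producerFree, bufferFree) + costs[i];
        const long long due = static_cast<long long>(i + 1) * period;
        if (ready > due) ++late;
        presented[i] = std::max(ready, due);  // a late frame is shown as soon as it is ready
        producerFree = ready;
    }
    return late;
}
```

With a period of 10 and costs of 2, 2, 15, 2, 2, the single-buffer case misses one frame (the expensive one cannot be started until the previous frame has been presented), while three buffers absorb the spike and nothing is late.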

The video renderer’s input is thus a queue of media samples. The renderer keeps popping them and presents each one when the presentation clock reaches its time stamp. Now let us have a look at the snapshot method signature:

    HRESULT GetCurrentImage(
      [out] BYTE **lpDib
    );

We have an image, that’s good, but now the problem is that it is not clear which sample from the queue this image corresponds to. VMR-7 does not report the associated time stamp even though it has this information. The renderer may have already accepted a frame and returned control while the presentation is only scheduled, so the caller cannot derive the time stamp even from the fact that the renderer filter completed the delivery call.
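The ambiguity can be reproduced with a small portable model. This is a sketch only: `QueuedRendererModel` and its methods are hypothetical stand-ins, not VMR-7 interfaces, and time stamps are plain integers in 100 ns units.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>

using ReferenceTime = std::int64_t;  // 100 ns units, as in DirectShow

const ReferenceTime kNothingPresented = -1;

// Hypothetical stand-in for a renderer with a multi-buffer input queue.
class QueuedRendererModel {
public:
    // Models the delivery call: the sample is queued and control returns at once.
    void Deliver(ReferenceTime startTime) {
        assert(pending_.empty() || pending_.back() <= startTime);
        pending_.push_back(startTime);
    }

    // Models the presentation clock: every sample that is due gets presented,
    // and the last of them stays on screen.
    void AdvanceClock(ReferenceTime now) {
        while (!pending_.empty() && pending_.front() <= now) {
            onScreen_ = pending_.front();
            pending_.pop_front();
        }
    }

    // Time stamp of the frame a snapshot would capture at this moment.
    ReferenceTime OnScreen() const { return onScreen_; }

    std::size_t PendingCount() const { return pending_.size(); }

private:
    std::deque<ReferenceTime> pending_;
    ReferenceTime onScreen_ = kNothingPresented;
};
```

After delivering samples stamped 0, 166666 and 333332 and advancing the clock to 170000, the frame on screen is the one stamped 166666, while the last delivery call that completed was for 333332. A caller who only sees completed deliveries cannot tell which of the three the snapshot shows.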

Video Mixing Renderer 9 is presumably subject to the same problem.

In contrast, EVR’s IMFVideoDisplayControl::GetCurrentImage method already returns the time stamp:

    HRESULT GetCurrentImage(
      [in, out] BITMAPINFOHEADER *pBih,
      [out] BYTE **pDib,
      [out] DWORD *pcbDib,
      [in, out] LONGLONG *pTimeStamp
    );

That is, at some point someone asked the right question: “So we have the image, where is the time stamp?”.

Presumably, a custom VMR-7 allocator/presenter can work around this problem, since the presenter processes the time stamp information and can report what the standard VMR-7 does not.
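A minimal sketch of that idea follows, under stated assumptions. In a real custom allocator/presenter the hook would presumably be IVMRImagePresenter::PresentImage, which receives a VMRPRESENTATIONINFO carrying rtStart; here everything is modeled with portable C++, and `TimestampTrackingPresenter`, `OnPresent` and `Snapshot` are hypothetical names. The byte vector stands in for whatever image data the presenter has at hand. I have not tested this against an actual VMR-7 graph.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>
#include <vector>

using ReferenceTime = std::int64_t;  // 100 ns units, as in DirectShow

// Image and time stamp travel together, so they can never get out of step.
struct Snapshot {
    std::vector<std::uint8_t> pixels;
    ReferenceTime startTime;
    bool valid;
};

// Hypothetical presenter-side bookkeeping, not a VMR-7 interface.
class TimestampTrackingPresenter {
public:
    // To be called from the presenter's present hook, on the renderer's thread.
    void OnPresent(const std::vector<std::uint8_t>& pixels, ReferenceTime startTime) {
        assert(!pixels.empty());
        std::lock_guard<std::mutex> lock(mutex_);
        last_.pixels = pixels;
        last_.startTime = startTime;
        last_.valid = true;
    }

    // Snapshot call for the application thread: returns the most recently
    // presented image together with its time stamp.
    Snapshot GetCurrentImage() const {
        std::lock_guard<std::mutex> lock(mutex_);
        return last_;
    }

private:
    mutable std::mutex mutex_;
    Snapshot last_{ {}, 0, false };
};
```

The point of the design is that the image and its time stamp are updated and read under the same lock, which is what the standard VMR-7 snapshot method cannot offer from the outside.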